Today I read that Opera's developer browser added local AI. After trying it, I ended up deleting it, since the AI only ran in a window within the browser. Afterwards, I tried LocalAI.app and a few others before finding Ollama!

Local AIs are interesting because you still have access to them when the internet is down. In our Xanadu Web Apps, we use local libraries as much as possible so resources are less likely to disappear. Nothing is worse than going to run your app and being unable to because a Google Font cannot be reached. Some things, like payment processing and address correction, cannot be local.

Ollama is interesting. Downloads are available for Mac, Windows, and Linux. I downloaded the Mac version and, as suggested on the GitHub page, typed the following in a new Terminal window:

ollama run llama2

The command downloaded the llama2 model and I was able to chat with it! Pretty simple. They have a large number of models available, but I was interested in the coding models. I found they had codellama, so I tried:

ollama run codellama

There are actually many different versions of codellama: 7b-instruct, 13b-instruct, 34b-instruct, and 70b-instruct. The number is the parameter count in billions, and "instruct" marks the variant tuned for conversational, instruction-following use. I tried 70b in another app and it was super slow, so I ended up installing 13b:

ollama run codellama:13b-instruct

At this point you can ask questions at the prompt, like: "write a php function that outputs the fibonacci sequence".

Later I found you can also ask questions directly from the command line, or via curl against the local API that Ollama serves on port 11434:

ollama run codellama:13b-instruct "write a php function that outputs the fibonacci sequence"

curl http://localhost:11434/api/generate -d '{ "model": "codellama:13b-instruct", "prompt": "write a php function that outputs the fibonacci sequence", "stream": false }'
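The hand-written JSON in the curl examples breaks as soon as a prompt contains an apostrophe, since the whole body sits inside single quotes. Here is a minimal sketch of a reusable shell helper for the same /api/generate call. It assumes Ollama is running on its default port 11434 and that jq is installed; the function names `ollama_generate_payload` and `ollama_ask` are hypothetical, not part of Ollama.

```shell
#!/usr/bin/env bash
# Sketch only. Assumptions: Ollama is listening on its default port 11434
# and jq is installed. Both function names are hypothetical helpers.

OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"

# Build the JSON request body with jq so quotes, apostrophes, and newlines
# in the prompt are escaped correctly.
ollama_generate_payload() {
  local model="$1" prompt="$2"
  jq -n --arg model "$model" --arg prompt "$prompt" \
    '{model: $model, prompt: $prompt, stream: false}'
}

# Send the prompt and print only the generated text. With "stream": false
# the server answers with a single JSON object whose "response" field
# holds the model's answer.
ollama_ask() {
  local model="$1" prompt="$2"
  ollama_generate_payload "$model" "$prompt" |
    curl -sS "$OLLAMA_URL/api/generate" --data-binary @- |
    jq -r '.response'
}
```

For example, `ollama_ask codellama:13b-instruct "write a php function that outputs the fibonacci sequence"` should print just the generated answer instead of the full JSON object.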

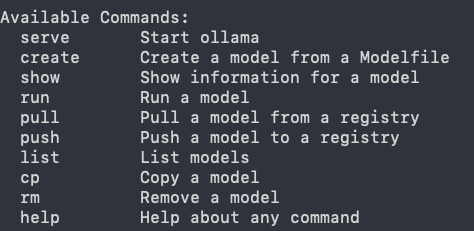

curl http://localhost:11434/api/chat -d '{ "model": "codellama:13b-instruct", "messages": [ { "role": "user", "content": "write a php function that outputs the fibonacci sequence" } ] }'

Last thing... Check out the commands that make it easy to list and delete models:

ollama list

ollama rm <model-name>
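Unlike /api/generate, the /api/chat endpoint takes the conversation as a `messages` array of `{role, content}` objects, so a follow-up question works by resending the earlier messages along with the model's reply. Below is a minimal sketch of that loop in shell, under the same assumptions (Ollama on port 11434, jq installed; `add_message` and `chat_reply` are hypothetical helper names). It also sets `"stream": false`, which the /api/chat curl example omits, so the reply arrives as one JSON object with the text in `.message.content`.

```shell
#!/usr/bin/env bash
# Sketch only. Assumptions: Ollama on its default port 11434, jq installed,
# and add_message / chat_reply are hypothetical helpers.

OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"

# Append one {role, content} message to a JSON array of messages
# and print the new array as compact JSON.
add_message() {
  local history="$1" role="$2" content="$3"
  jq -c --arg role "$role" --arg content "$content" \
    '. + [{role: $role, content: $content}]' <<<"$history"
}

# Send the whole conversation and print the assistant's reply text.
# With "stream": false the reply is in .message.content.
chat_reply() {
  local model="$1" history="$2"
  jq -n --arg model "$model" --argjson messages "$history" \
    '{model: $model, messages: $messages, stream: false}' |
    curl -sS "$OLLAMA_URL/api/chat" --data-binary @- |
    jq -r '.message.content'
}
```

A two-turn exchange might start with `history=$(add_message '[]' user "write a php function that outputs the fibonacci sequence")`, then `reply=$(chat_reply codellama:13b-instruct "$history")` and `history=$(add_message "$history" assistant "$reply")` before adding the next user message and calling `chat_reply` again.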

I have a feeling that Ollama will be useful for adding AI to our Xanadu Web Apps!!!